Our Products and Services

Neural network model

The Artificial Neural Network (ANN) is an information processing model that mimics the way biological nervous systems process information. It is made up of a large number of processing elements (called processors or neurons) connected to each other through links, each carrying a weight (called a link weight), which work together as a unified whole to solve a particular problem.

- A neuron is an information processing unit and is the basic component of a neural network.

- The basic components of an artificial neuron are:

- Inputs: the input signals of a neuron are usually presented as a vector.

- Link weights: each link is assigned a weight (called a synaptic weight). The weight connecting input signal j to neuron k is denoted w_jk. Typically, these weights are randomly initialized when the network is created and are continually updated during learning.

- Summing function: computes the sum of the inputs, each multiplied by its associated weight.

- Threshold (also known as bias): usually included as a component of the transfer function.

- Transfer function (also known as activation function): limits the output range of each neuron. It takes as input the result of the summing function together with the threshold. Typically, the output of each neuron is limited to the interval [0, 1] or [-1, 1]. A wide variety of transfer functions, both linear and nonlinear, are listed in Table 1.1. The choice of transfer function depends on the problem at hand and on the experience of the network designer.

- Output: the output signal of the neuron; each neuron has at most one output.

- Like biological neurons, artificial neurons receive input signals, process them (multiplying each signal by its weight, summing the results, and passing the sum to the transfer function), and produce an output signal (the result of the transfer function).

- Artificial neural networks are built to simulate how the human brain works. In an ordinary (feedforward) neural network, each input x is processed independently to produce its corresponding output; no information gathered from one input is carried over to the next.
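The neuron described above can be sketched in a few lines of Python. This is a minimal illustration, not ImechANN's implementation: the function names (`neuron_output`, `init_weights`), the choice of sigmoid, tanh and linear as example transfer functions, and the uniform range (-0.5, 0.5) used for random weight initialization are all assumptions made for the example. The sketch adds the bias to the weighted sum; some texts subtract a threshold instead, which only flips its sign.

```python
import math
import random
from typing import Callable, List, Optional, Sequence


# Example transfer (activation) functions. The mathematical ranges are open
# intervals; in floating point the values can saturate at the endpoints.
def sigmoid(s: float) -> float:
    """Logistic sigmoid, output in [0, 1]; written to avoid overflow for large |s|."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def tanh(s: float) -> float:
    """Hyperbolic tangent, output in [-1, 1]."""
    return math.tanh(s)


def linear(s: float) -> float:
    """Identity transfer function; the output is not bounded."""
    return s


def init_weights(n_inputs: int, seed: Optional[int] = None) -> List[float]:
    """Randomly initialize link weights w_1k..w_nk (the range is an assumption)."""
    rng = random.Random(seed)
    return [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]


def neuron_output(
    x: Sequence[float],
    w: Sequence[float],
    bias: float,
    transfer: Callable[[float], float] = sigmoid,
) -> float:
    """Return the single output of neuron k.

    x        -- input signal vector (x_1, ..., x_n)
    w        -- link weights (w_1k, ..., w_nk), one per input
    bias     -- threshold, added to the weighted sum in this sketch
    transfer -- transfer (activation) function applied to the sum
    """
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input signal")
    # Summing function: weighted sum of the inputs.
    s = sum(xj * wjk for xj, wjk in zip(x, w))
    # Transfer function applied to the weighted sum plus the bias.
    return transfer(s + bias)
```

For example, `neuron_output([1.0, 0.5, -1.0], init_weights(3, seed=0), 0.1, tanh)` returns a single value in [-1, 1]. Swapping the `transfer` argument is where the problem-dependent choice of transfer function would be made.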

LSTM Networks:

- LSTMs (Long Short-Term Memory networks) were introduced by Hochreiter & Schmidhuber (1997) and were later refined and popularized by many researchers. They work very well on a wide range of problems, which explains their popularity today.

- LSTMs are explicitly designed to avoid the long-term dependency problem. Remembering information for long periods is their default behavior, not something they struggle to learn: the architecture itself retains information without any special intervention.

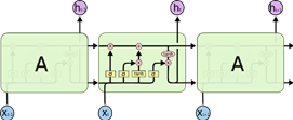

Every recurrent neural network (RNN) takes the form of a chain of repeating neural network modules. In standard RNNs, each module has a very simple structure, usually a single layer with a tanh activation function.
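To make the chain of repeating modules concrete, here is a hedged pure-Python sketch of a standard RNN whose module is a single tanh layer, h_t = tanh(W_xh x_t + W_hh h_{t-1} + b). The weight names `W_xh`, `W_hh`, `b`, the helper names, and the zero initial state are notational assumptions for this illustration. Unlike an ordinary network that processes each input independently, the hidden state `h` is passed from one step to the next, so each output depends on earlier inputs.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def rnn_step(x_t: Sequence[float], h_prev: Sequence[float],
             W_xh: Matrix, W_hh: Matrix, b: Sequence[float]) -> Vector:
    """One repeating module: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    from_input = matvec(W_xh, x_t)
    from_state = matvec(W_hh, h_prev)
    return [math.tanh(a + r + bi) for a, r, bi in zip(from_input, from_state, b)]


def rnn_forward(xs: Sequence[Sequence[float]], W_xh: Matrix,
                W_hh: Matrix, b: Sequence[float]) -> List[Vector]:
    """Apply the same module at every time step, passing the hidden state along."""
    h: Vector = [0.0] * len(b)  # assumed zero initial state
    states: List[Vector] = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b)
        states.append(h)
    return states
```

With one-dimensional weights `[[1.0]]` and `[[0.5]]`, zero bias, and inputs `[[1.0], [0.0]]`, the second state is nonzero even though the second input is zero: the module carries information forward from the first step.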

- ImechANN can learn from experience to process, classify and predict time series with very long time lags of unknown length between input events. Built on the LSTM structure, ImechANN also has this chain-like structure, but its repeating module is structured differently from that of a standard recurrent neural network. Instead of a single neural network layer, it has four, which interact in a very specific way. Among these are structures called gates, each made up of a sigmoid activation function and a pointwise multiplication, which optionally let information through and thereby protect and control the cell state. Through sigmoid and tanh activation functions, these gates decide what new information is stored in the cell, how the cell state is updated, and what is output for the next step.
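The four interacting layers can be sketched as a single LSTM step. This follows the widely used formulation with a forget gate (added to the original 1997 design in later work) and is an illustrative assumption, not a description of ImechANN's internals: the parameter layout (a dict mapping `"forget"`, `"input"`, `"candidate"`, `"output"` to a weight matrix and bias applied to the concatenation [h_{t-1}, x_t]) and all function names are hypothetical. The three sigmoid layers act as gates, and the tanh layer proposes candidate values for the cell state.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical layout: layer name -> (W, b); W has shape hidden x (hidden + input).
Params = Dict[str, Tuple[Matrix, Vector]]


def sigmoid(s: float) -> float:
    """Numerically stable logistic sigmoid, output in [0, 1]."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def affine(W: Matrix, b: Sequence[float], v: Sequence[float]) -> Vector:
    """Compute W v + b."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x_t: Sequence[float], h_prev: Sequence[float],
              c_prev: Sequence[float], p: Params) -> Tuple[Vector, Vector]:
    """One LSTM module: three sigmoid gates plus one tanh layer."""
    z = list(h_prev) + list(x_t)                                # [h_{t-1}, x_t]
    f = [sigmoid(s) for s in affine(*p["forget"], z)]           # forget gate
    i = [sigmoid(s) for s in affine(*p["input"], z)]            # input gate
    c_hat = [math.tanh(s) for s in affine(*p["candidate"], z)]  # candidate values
    o = [sigmoid(s) for s in affine(*p["output"], z)]           # output gate
    # Cell state update: c_t = f * c_{t-1} + i * c_hat (elementwise).
    c = [fk * ck + ik * chk for fk, ck, ik, chk in zip(f, c_prev, i, c_hat)]
    # Output passed to the next step: h_t = o * tanh(c_t) (elementwise).
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def lstm_forward(xs: Sequence[Sequence[float]], p: Params,
                 hidden_size: int) -> Tuple[List[Vector], Vector]:
    """Run the same module over a sequence, starting from zero states (assumed)."""
    h: Vector = [0.0] * hidden_size
    c: Vector = [0.0] * hidden_size
    outputs: List[Vector] = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, p)
        outputs.append(h)
    return outputs, c
```

Setting the forget-gate bias high and the input-gate bias low keeps `c` nearly unchanged across a step; this gating of the cell state is the mechanism that lets information persist over long time lags.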
